This group is a diverse mix of entrepreneurs, all leveraging AI in different ways. Some are seasoned veterans in sales and marketing, diving into AI for the first time. Others are AI experts, mastering the business side of things. And for some, it's all just plain fun.

But wherever you fall on that spectrum, we're all on the cutting edge of AI together.

For me, being on the cutting edge of anything felt like a distant dream, until AI started democratizing everything. AI doesn't just open doors; it tears down roadblocks. It's helping turn lofty dreams into real, tangible goals.

Why AI Matters

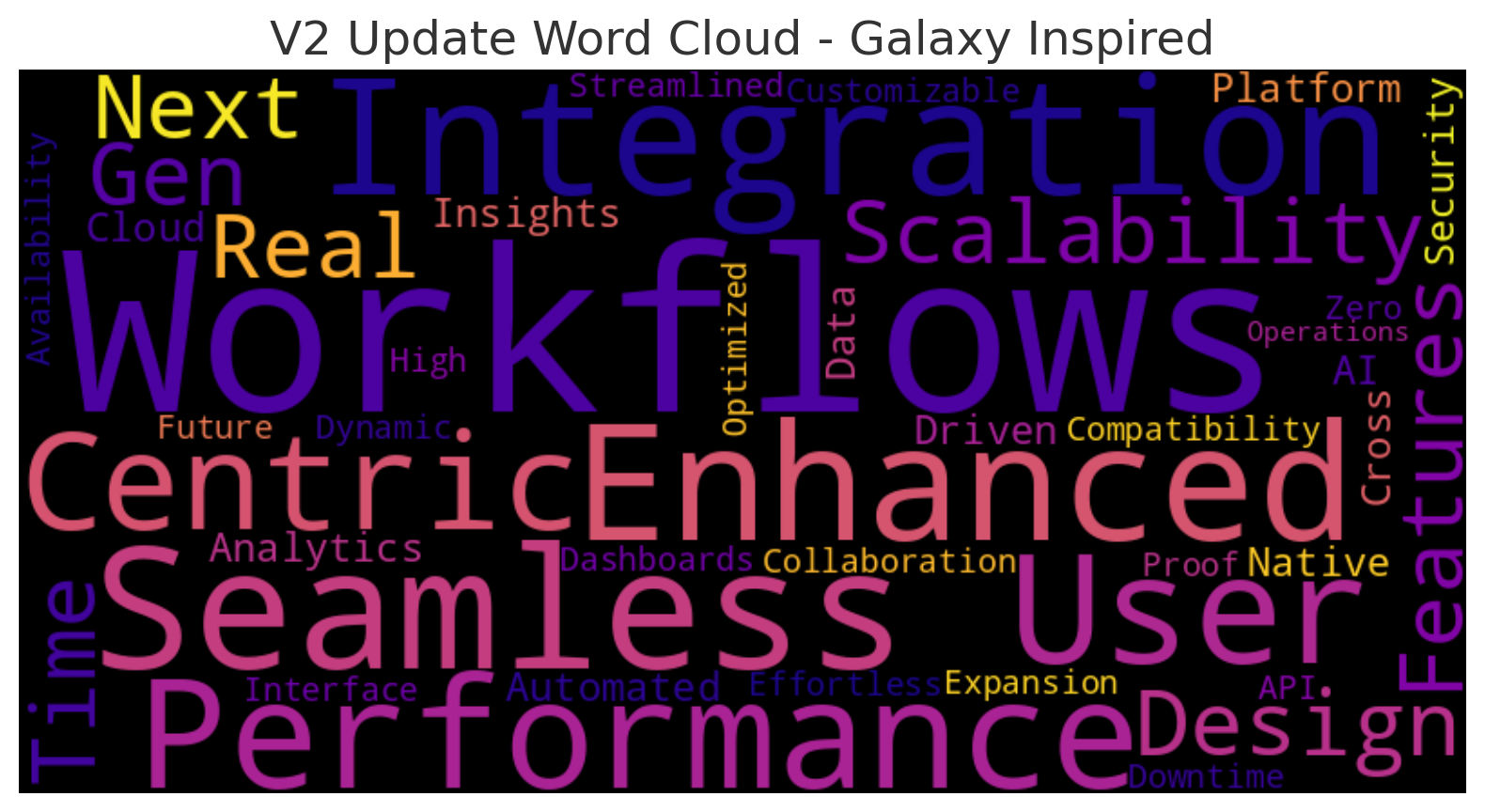

AI has a unique ability to shrink the distance between where you are now and where you want to be. It helps you see the big picture, get past roadblocks, and pursue your end goals with confidence. It's giving "synergy" new meaning: real synergy, not just a LinkedIn buzzword. We've already redefined "bespoke" solutions, and now we're redefining collaboration.

AI isn't just helping us imagine new possibilities; it's helping us create them. And as we step into 2024, we're finding that even the meaning of "bespoke" has changed: it's deeper, heavier, more nuanced. Machine learning even lets us measure how a word like "bespoke" shifts in meaning over time, for example by comparing the word embeddings (numeric representations of meaning) learned from text written in different years.

Why You Should Care About the Technical Side

I get it. Not everyone is interested in the technical underpinnings of AI. For many of you, the goal is simple: tangible results, such as better customer interactions, greater efficiency, and streamlined operations. That's completely valid, and AI is a tool that can deliver those results.

But there's an advantage to going a little deeper. A solid understanding of how AI works can offer three key benefits:

Troubleshooting: When something goes wrong, understanding the principles behind AI can make it easier to diagnose and fix the issue, saving time and resources.

Explaining Value: Whether you're pitching to clients or investors, being able to articulate the value of AI-driven solutions with technical precision will make your case more compelling.

Credibility: In a world where everyone is jumping on the AI bandwagon, demonstrating a deep, well-informed grasp of the technology can set you apart.

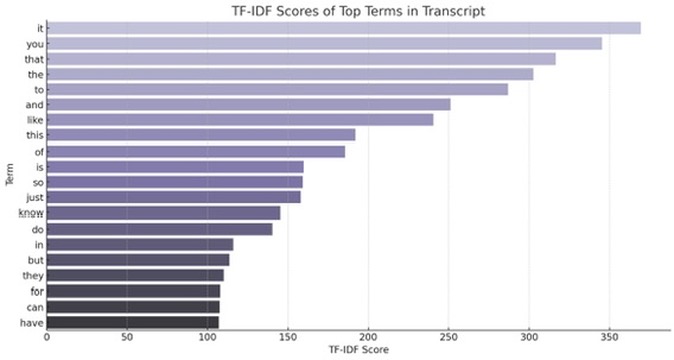

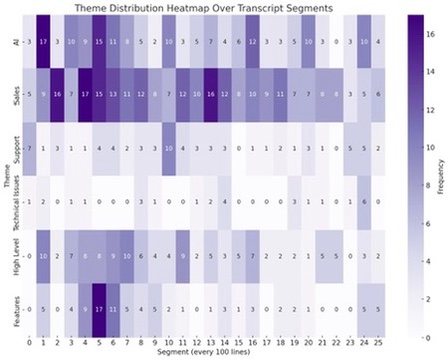

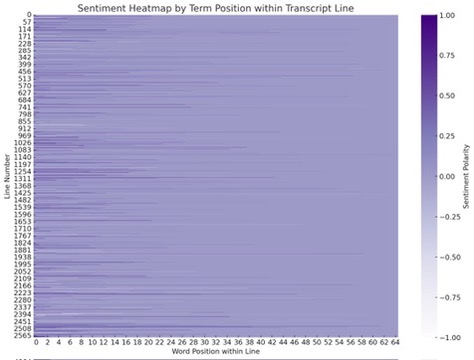

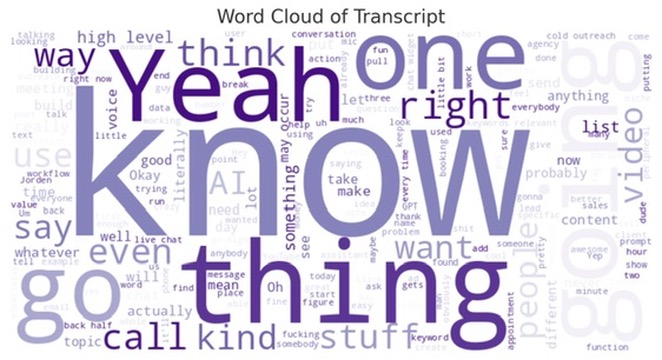

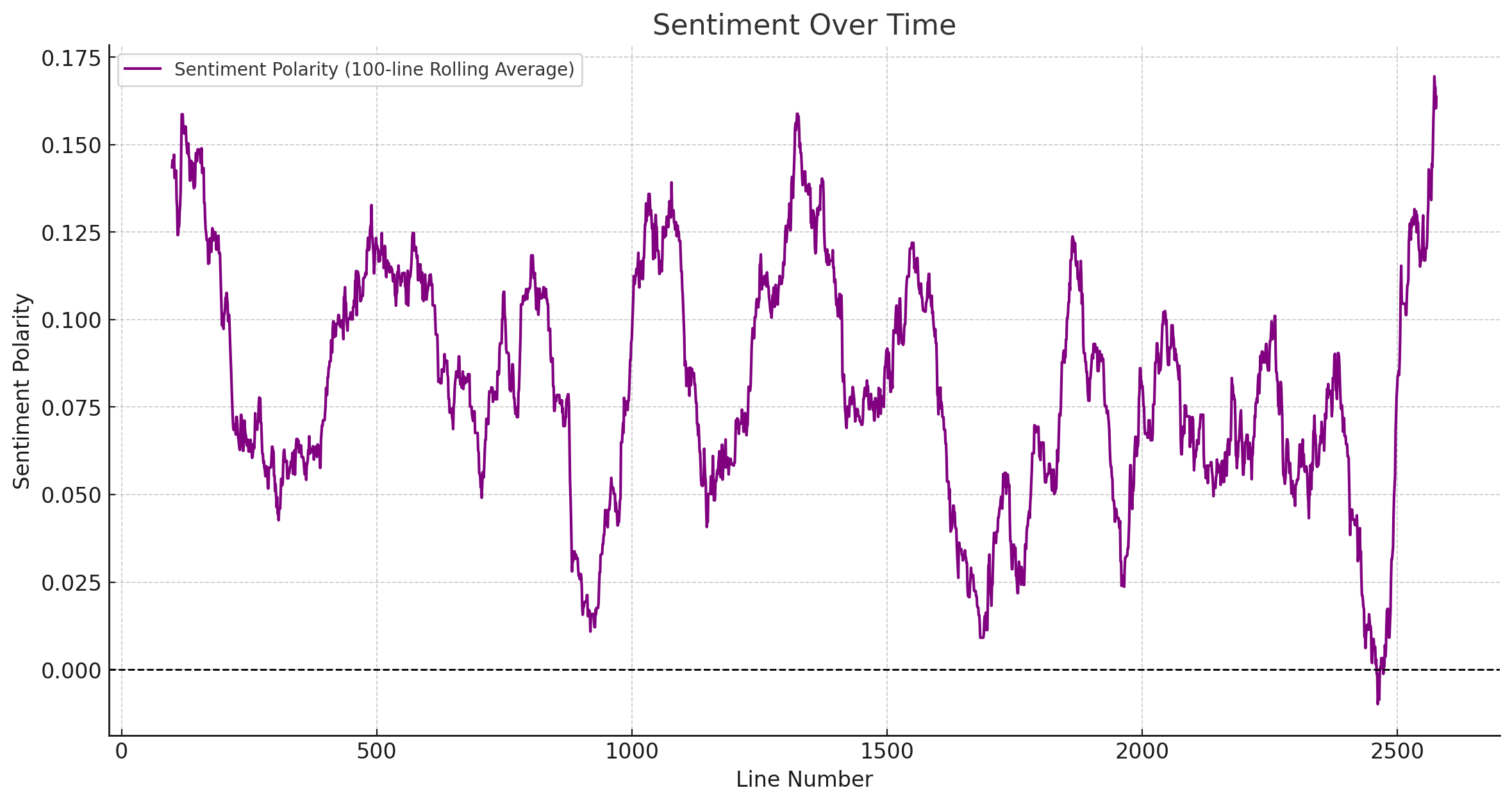

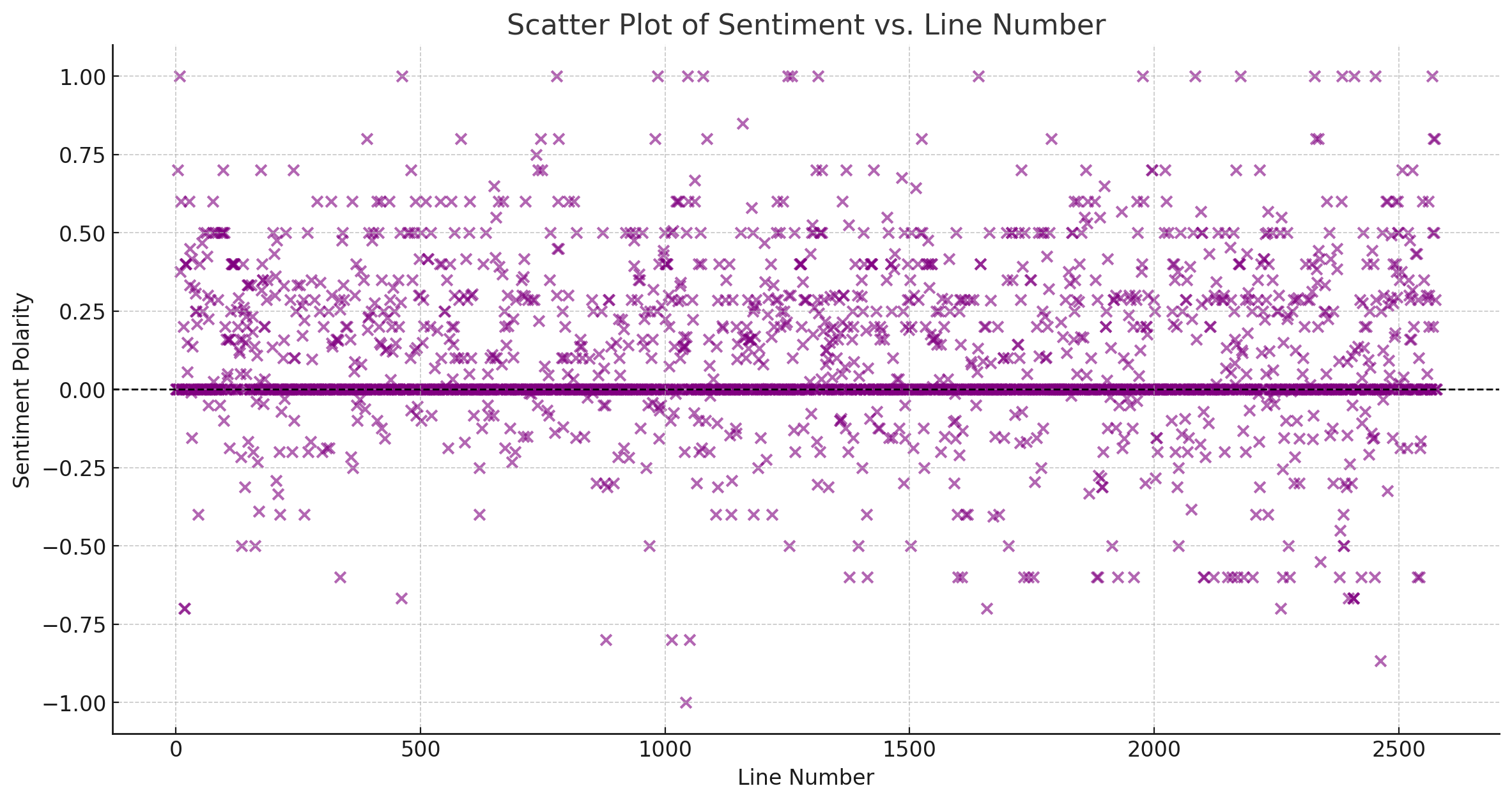

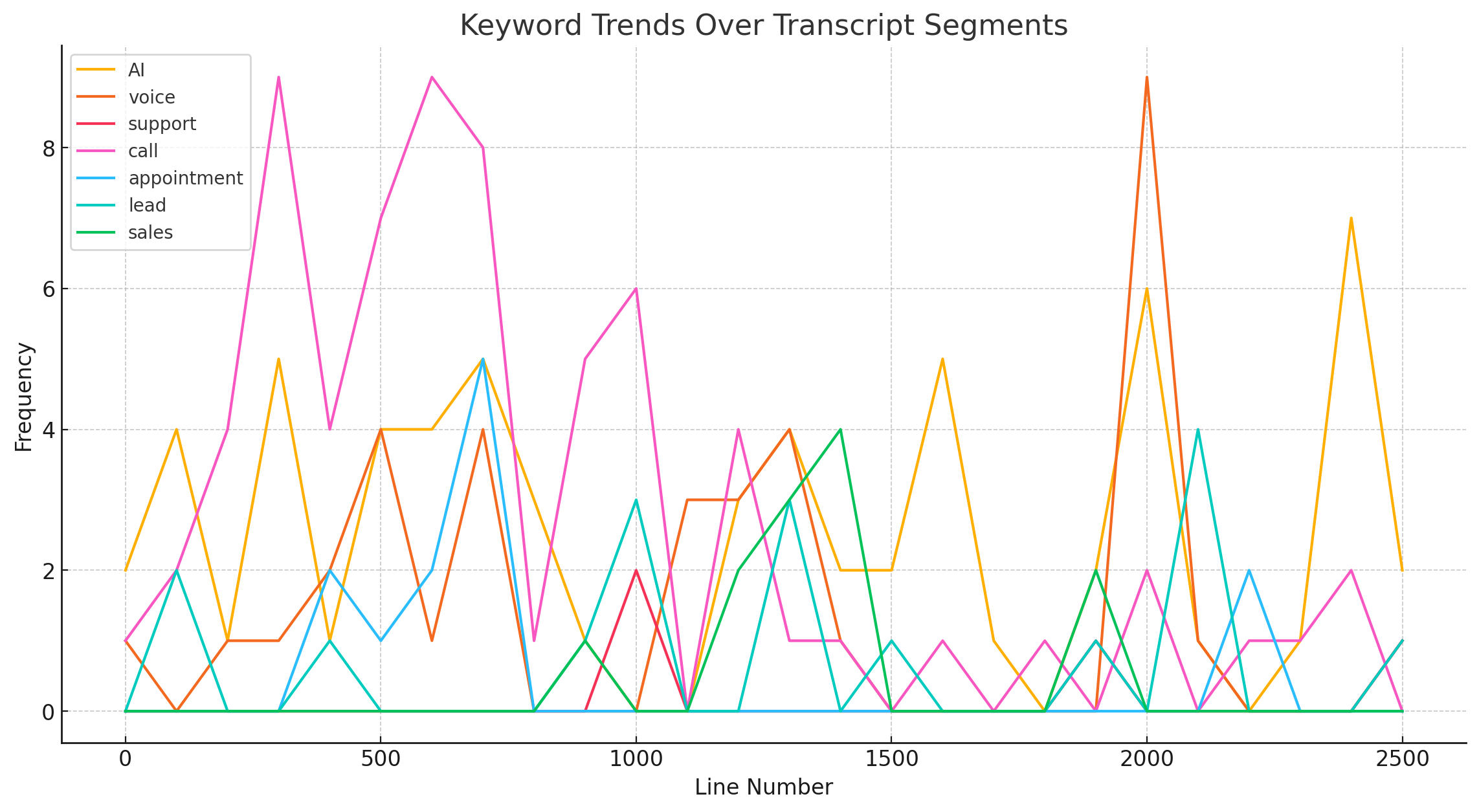

Now, let's dive into the technical details behind some of the AI systems you interact with every day. I pulled a transcript from an assistable.ai Town Hall meeting, and we'll break it down to illustrate some foundational concepts in artificial intelligence.

Tokenization: Breaking Down Language for AI

Tokenization is the process of converting text into smaller units called tokens, which can be whole words, subwords (pieces of words), or even individual characters. This step is crucial because natural language carries meaning at multiple levels (characters, word parts, words, phrases), and a model cannot read raw text directly: each token is mapped to an integer ID from a fixed vocabulary, and that sequence of IDs is what the model actually processes.
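To make the three granularities concrete, here is a minimal sketch that tokenizes the same sentence at the word, subword, and character level, then maps the subword tokens to integer IDs. The subword vocabulary is a tiny hypothetical set picked by hand, and the splitting rule (greedy longest-match-first, similar in spirit to how WordPiece tokenizers split words) is one illustrative choice; it is not how any particular product, including assistable.ai, tokenizes text.

```python
import re

# Hypothetical toy subword vocabulary, hand-picked for this example only.
# Real tokenizers learn vocabularies with tens of thousands of entries.
SUBWORD_VOCAB = {"token", "ization", "break", "s", "text", "down"}

def word_tokens(text: str) -> list[str]:
    """Word-level: runs of word characters, plus single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text.lower())

def char_tokens(text: str) -> list[str]:
    """Character-level: every non-whitespace character is its own token."""
    return [c for c in text.lower() if not c.isspace()]

def subword_tokens(text: str, vocab: set[str]) -> list[str]:
    """Subword-level: greedy longest-match against `vocab`, word by word.
    Falls back to single characters when no vocabulary entry matches."""
    out = []
    for word in word_tokens(text):
        start = 0
        while start < len(word):
            # Try the longest remaining piece first, then shrink it.
            for end in range(len(word), start, -1):
                piece = word[start:end]
                if piece in vocab or end - start == 1:
                    out.append(piece)
                    start = end
                    break
    return out

def to_ids(tokens: list[str]) -> list[int]:
    """Map each distinct token to an integer ID (assigned in sorted order).
    Real tokenizers look IDs up in a fixed, pre-built vocabulary instead."""
    index = {tok: i for i, tok in enumerate(sorted(set(tokens)))}
    return [index[t] for t in tokens]

if __name__ == "__main__":
    text = "Tokenization breaks text down."
    print(word_tokens(text))        # ['tokenization', 'breaks', 'text', 'down', '.']
    pieces = subword_tokens(text, SUBWORD_VOCAB)
    print(pieces)                   # ['token', 'ization', 'break', 's', 'text', 'down', '.']
    print(len(char_tokens(text)))   # 27
    print(to_ids(pieces))           # [6, 3, 1, 4, 5, 2, 0]
```

The same sentence becomes 5 word tokens, 7 subword tokens, or 27 character tokens. Notice that "breaks" splits into "break" + "s", so the model can reuse what it knows about "break".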

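However, tokenization is not just about chopping up sentences; it's about striking the right balance. Splitting text only into whole words misses nuances, such as the shared pieces in "break" and "breaks", and it cannot handle rare or brand-new words that aren't in the vocabulary. Splitting into individual characters creates too much noise: sequences get very long, and each unit carries little meaning on its own. Subword tokens offer a "happy medium": common words stay whole, rare words are broken into familiar pieces, and each token preserves enough context for the model to represent its meaning as a high-dimensional vector (an embedding).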
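Where does a subword "happy medium" come from? One common answer is Byte Pair Encoding (BPE), which GPT-2-style tokenizers use in a byte-level form. Below is a minimal character-level sketch: start from single characters and repeatedly merge the most frequent adjacent pair. The toy corpus and the merge count are made-up illustration values, and the sketch omits details real implementations add, such as end-of-word markers and byte-level handling.

```python
from collections import Counter

def merge_pair(symbols: tuple[str, ...], pair: tuple[str, str]) -> tuple[str, ...]:
    """Replace every adjacent occurrence of `pair` with one joined symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)

def learn_bpe(words: list[str], num_merges: int) -> list[tuple[str, str]]:
    """Learn merge rules by repeatedly joining the most frequent adjacent pair."""
    vocab = Counter(tuple(w) for w in words)  # each word starts as characters
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)  # ties go to the first pair seen
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges

def apply_bpe(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Tokenize a word by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)

if __name__ == "__main__":
    corpus = ["low"] * 5 + ["lower"] * 2 + ["newest"] * 6 + ["widest"] * 3
    merges = learn_bpe(corpus, num_merges=4)
    print(merges)                       # [('e', 's'), ('es', 't'), ('l', 'o'), ('lo', 'w')]
    print(apply_bpe("lowest", merges))  # ['low', 'est']
```

"lowest" never appears in the corpus, yet it comes out as two meaningful pieces, "low" + "est", instead of six characters. More merges grow the vocabulary and shorten sequences; fewer merges keep the vocabulary small but push toward character-level noise. The merge count is the knob that sets the balance.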

</div>

</div>